Introduction

Generative AI has quickly become a major force in modern technology, changing how teams create, automate, and innovate. With the field advancing this fast, understanding its vocabulary is no longer just helpful; it is essential.

Whether you work in product, engineering, marketing, or data, knowing Generative AI terminology can set you apart and keep you ahead of the game. After all, clear language leads to clear execution.

This guide explains the essential terms every tech professional should know, so that the complicated feels more approachable and the future seems a bit less daunting, and a whole lot more thrilling.

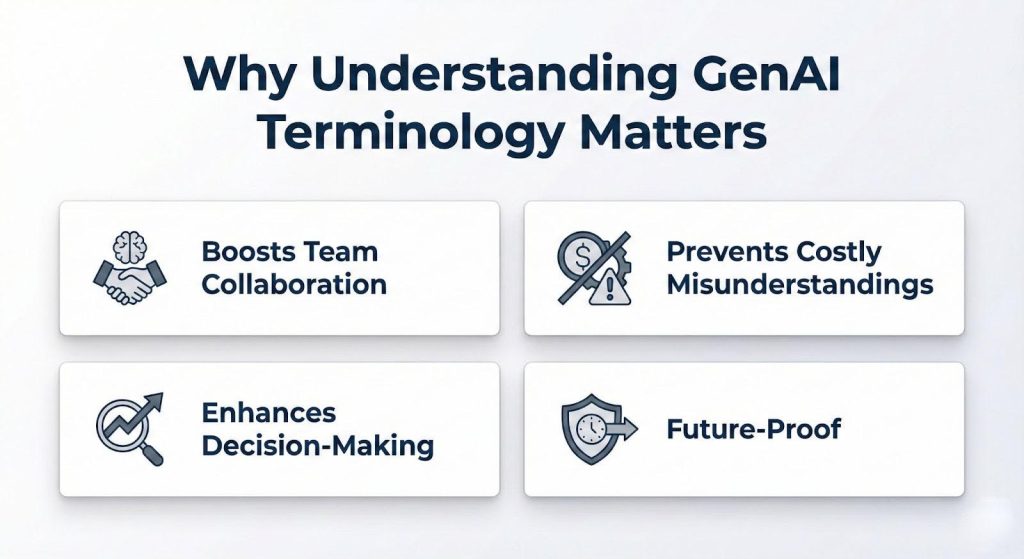

Why Understanding GenAI Terminology Matters

Having the right vocabulary is key to clear communication and smarter work. Here is why fluency in GenAI terminology pays off.

- Boosts Team Collaboration: When product managers, developers, designers, and analysts are all speaking the same AI lingo, projects run more smoothly and efficiently.

- Prevents Costly Misunderstandings: Using the wrong terms can lead to incorrect assumptions, delays, or even flawed features. Being precise saves time and resources.

- Enhances Decision-Making: Understanding the essential terms allows you to assess AI tools, vendors, and capabilities with greater clarity.

- Future-Proofs Your Skills: The more fluent you become, the easier it is to adapt as AI continues to evolve.

Foundational Terms: The Core Concepts

These essential concepts are the foundation of every GenAI system. Grasping them allows professionals to understand how contemporary AI operates at its core.

- Generative AI: A branch of artificial intelligence focused on producing original content such as text, images, audio, or code. It uses learned patterns to generate outputs that resemble human creativity and reasoning.

- Large Language Models (LLMs): Neural network models with very large numbers of parameters, trained on vast text datasets to understand and produce natural language. They interpret context, answer questions, and generate responses with near-human fluency.

- Neural Networks: Computational structures inspired by the human brain, consisting of interconnected layers that process inputs. They allow machines to recognize patterns, learn relationships, and make predictions.

- Parameters: Internal values learned during model training that define how the model behaves. The number of parameters, together with how well they are trained, significantly influences accuracy, reasoning ability, and output quality.
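
To make "parameters" a bit more concrete, here is a minimal sketch that counts the learnable values in a small fully connected neural network. The function name `count_dense_parameters` and the layer sizes are made up for illustration; real LLMs use other layer types and have billions of parameters, but the counting idea is similar.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-to-output connection
        total += n_out         # one bias per output unit
    return total


# Hypothetical toy network: 4 inputs, one hidden layer of 8 units, 2 outputs.
print(count_dense_parameters([4, 8, 2]))  # 4*8 + 8 + 8*2 + 2 = 58
```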

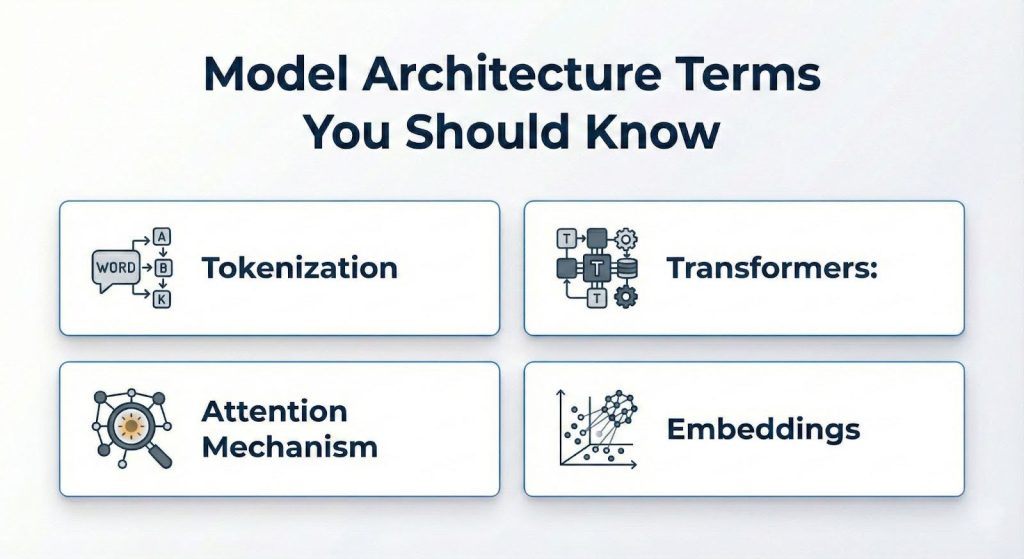

Model Architecture Terms You Should Know

These terms break down the key structural and functional elements that drive today’s AI models. Grasping these concepts gives you a clearer picture of how GenAI systems work to process and create information.

- Tokenization: Breaking text into smaller pieces called tokens, such as words or subwords, and mapping each one to a numeric ID. This gives the model a fixed vocabulary of units it can process and analyze.

- Transformers: This model architecture has changed the game in AI by allowing for parallel processing and a better grasp of context. It enables models to manage long text sequences with impressive accuracy and speed.

- Attention Mechanism: This technique helps models figure out which parts of the input are the most important. By assigning an importance score (a weight) to each part of the input, the model can concentrate on the relevant context and generate more accurate outputs.

- Embeddings: These are numerical representations of words, sentences, or items that capture their meaning. Embeddings allow models to grasp relationships, similarities, and context within the data.
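
The sketch below ties three of these terms together in plain Python. It is a toy under loud assumptions: the vocabulary and the 2-dimensional embeddings are hand-picked, tokenization is whitespace-based rather than subword-based, and the attention step uses the embeddings directly as queries and keys instead of the learned projections a real Transformer would apply.

```python
import math


def tokenize(text, vocab):
    """Split on whitespace and map each word to an integer id (0 = unknown)."""
    return [vocab.get(word, 0) for word in text.lower().split()]


def cosine_similarity(a, b):
    """Similarity of two vectors: near 1.0 for similar direction, near 0.0 for unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product scores between one query and every key, as weights."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hypothetical vocabulary and hand-picked embeddings, for illustration only.
VOCAB = {"cat": 1, "kitten": 2, "car": 3}
EMBEDDINGS = {0: [0.0, 0.0], 1: [1.0, 0.1], 2: [0.9, 0.2], 3: [0.1, 1.0]}

ids = tokenize("Cat kitten car", VOCAB)
vectors = [EMBEDDINGS[i] for i in ids]
print(ids)  # [1, 2, 3]

# "cat" should be closer in meaning to "kitten" than to "car".
print(cosine_similarity(vectors[0], vectors[1]) > cosine_similarity(vectors[0], vectors[2]))  # True

# How much "cat" attends to each token in the sequence (weights sum to 1).
weights = attention_weights(vectors[0], vectors)
print([round(w, 3) for w in weights])
```

In this toy, "cat" gives the least weight to "car" because their embeddings point in different directions, which is the intuition behind attention focusing on relevant context.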

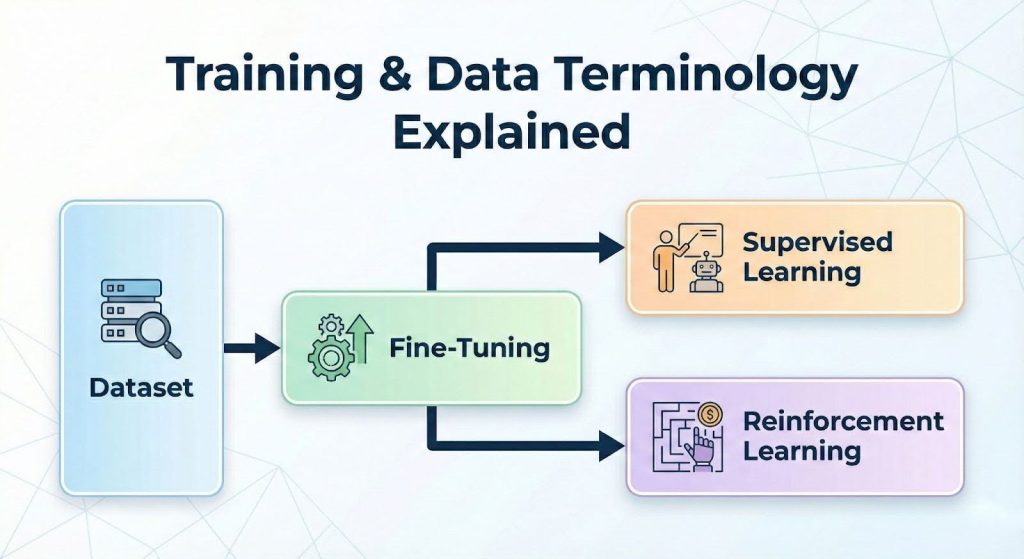

Training & Data Terminology Explained

Understanding training and data vocabulary is crucial for grasping how AI systems learn and develop. These terms outline the processes that influence a model’s intelligence and performance.

- Dataset: Think of it as a well-organized collection of data that’s essential for training the model. The variety and quality of this dataset play a crucial role in determining how accurate, fair, and reliable the model will be.

- Fine-Tuning: This means taking a pre-trained model and continuing to train it on task-specific data so it fits a particular use case. It boosts relevance by aligning the model with the specialized knowledge of its field.

- Supervised Learning: This training method relies on labeled examples, like tagged images or annotated text. It guides the model by showing it the right answers, which helps it produce predictable and accurate results.

- Reinforcement Learning: Here, the model learns from feedback in the form of rewards. Over many trials it comes to favor actions that earned higher rewards and to avoid those that did not, which improves its decision-making.
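
As a rough illustration of supervised learning, the sketch below fits a straight line to a handful of labeled examples using gradient descent. The data, the function name `train_linear_model`, and the hyperparameters are all assumptions chosen for the example; training a real GenAI model follows the same "compare prediction to label, then adjust parameters" loop at a vastly larger scale.

```python
def train_linear_model(examples, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b to labeled (x, y) pairs by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the model's two parameters, learned from the data
    n = len(examples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        # Step each parameter against its gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical labeled dataset generated from y = 2x + 1.
LABELED = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = train_linear_model(LABELED)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```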

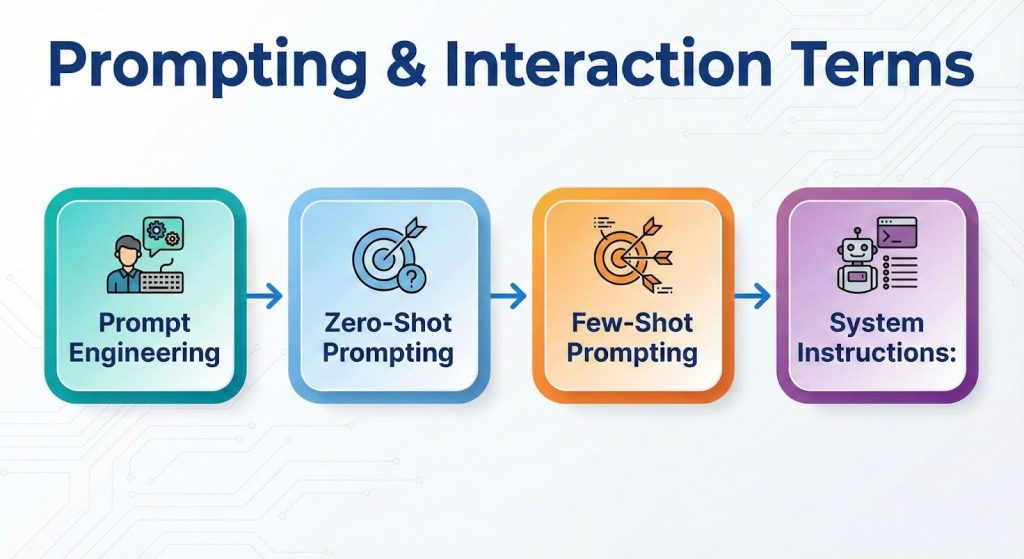

Prompting & Interaction Terms

Prompting is all about how we steer GenAI models. When you get the hang of these ideas, you’ll find that the AI outputs become more precise, meaningful, and under your control.

- Prompt Engineering: The skill of crafting structured inputs that steer the model’s behavior. Effective prompts improve clarity, reduce mistakes, and lead to more precise results.

- Zero-Shot Prompting: A method where the model is asked to perform a task without being shown any examples in the prompt. It relies on, and therefore also tests, the model’s general reasoning skills and flexibility.

- Few-Shot Prompting: It involves giving a small number of examples to help guide the model toward the desired outcome. This approach boosts accuracy by providing context and showcasing the expected patterns.

- System Instructions: These are the guidelines set at the beginning of interactions that shape the model’s tone, behavior, and style. They serve as a base for producing consistent and reliable outputs.
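
The difference between zero-shot and few-shot prompting is easy to see in code. The sketch below assembles a chat-style list of messages; the `system`/`user`/`assistant` role names follow a convention used by many chat APIs, but the exact format is an assumption here and varies by provider. `build_messages` is a hypothetical helper, not part of any library.

```python
def build_messages(system_instruction, task, examples=()):
    """Assemble a chat-style message list.

    With no examples this is a zero-shot prompt; with examples it is few-shot.
    """
    messages = [{"role": "system", "content": system_instruction}]
    for example_input, example_output in examples:
        # Each example is shown as a past exchange the model can imitate.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": task})
    return messages


SYSTEM = "You are a concise assistant that labels sentiment as positive or negative."
TASK = "Review: The battery died after a week."

zero_shot = build_messages(SYSTEM, TASK)
few_shot = build_messages(
    SYSTEM,
    TASK,
    examples=[
        ("Review: I love this phone.", "positive"),
        ("Review: The screen cracked on day one.", "negative"),
    ],
)

print(len(zero_shot))  # 2
print(len(few_shot))   # 6
```

The system instruction stays the same in both cases; only the number of worked examples placed before the real task changes.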

Deployment and Integration Terms

Once a model is created, the next step is to deploy it effectively. These concepts illustrate how AI fits into real-world systems and enhances scalable performance.

- API Integration: This involves using standardized interfaces to link AI capabilities with applications. It facilitates seamless communication between different systems and allows developers to efficiently incorporate AI features.

- Inference: This is the stage where a trained model produces outputs, like predictions or text responses. The performance during inference is crucial as it affects how quickly and accurately the system can provide results.

- Latency: This refers to the time it takes for the model to respond to a request. Keeping latency low is essential for applications that interact with users and require real-time responses.

- Scaling: This is all about how well AI systems can handle increased workloads or tackle more complex tasks. Effective scaling is key to ensuring stability, speed, and consistent performance as demand grows.
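
Latency is usually tracked as percentiles rather than a single average, because a few slow requests can hurt the user experience. Here is a minimal sketch of how that measurement might look. `fake_model` is a stand-in for a real inference call (an assumption, since no specific API is named here), and the 95th percentile uses the simple nearest-rank method.

```python
import statistics
import time


def fake_model(prompt):
    """Stand-in for a real inference call; it only reverses the prompt."""
    return prompt[::-1]


def measure_latency(infer, prompts):
    """Time each inference call and return the latencies in milliseconds."""
    latencies_ms = []
    for prompt in prompts:
        start = time.perf_counter()
        infer(prompt)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def summarize(latencies_ms):
    """Report median, 95th percentile (nearest rank), and worst-case latency."""
    ordered = sorted(latencies_ms)
    # Integer ceiling of 0.95 * n, minus 1 to get a zero-based index.
    p95_index = (95 * len(ordered) + 99) // 100 - 1
    return {
        "p50": statistics.median(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }


latencies = measure_latency(fake_model, ["hello world"] * 50)
print(summarize(latencies))
```

In a real deployment the same loop would wrap the actual API or model call, and the summary would be watched as request volume grows to see how well the system scales.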

Ethics, Safety, and Governance Terms

Creating responsible AI means we need strong ethical guidelines in place. These principles help guarantee transparency, fairness, and accountability when it comes to implementing Generative AI.

- Bias: This refers to those unintentional unfair patterns that pop up because of uneven or skewed training data. Spotting and addressing bias is key to making sure AI behaves fairly.

- Hallucinations: These are moments when the model confidently churns out information that’s either wrong or completely made up. Keeping an eye on hallucinations is vital for ensuring accuracy and building trust.

- Content Safety Filters: These are the tools that identify and limit harmful, unsafe, or inappropriate content. They’re here to protect users and make sure AI operates within set safety guidelines.

- Governance Frameworks: These are the policies and structures that outline how to develop and use AI responsibly. Such frameworks assist organizations in meeting ethical, legal, and compliance standards.
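
One simple way to start spotting bias is to compare how often a model produces a favorable outcome for different groups. The sketch below computes that gap, sometimes called the demographic parity difference. The group names and decisions are made up for illustration, and a real fairness review would look at more than this one number.

```python
def positive_rate(outcomes):
    """Share of favorable outcomes, where 1 = favorable and 0 = unfavorable."""
    return sum(outcomes) / len(outcomes)


def demographic_parity_gap(outcomes_by_group):
    """Return the largest difference in favorable-outcome rate between groups."""
    rates = {group: positive_rate(o) for group, o in outcomes_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model decisions (1 = approved) for two made-up groups.
DECISIONS = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}

gap, rates = demographic_parity_gap(DECISIONS)
print(rates)  # {'group_a': 0.75, 'group_b': 0.25}
print(gap)    # 0.5
```

A gap near zero means the groups receive favorable outcomes at similar rates; a large gap is a signal to investigate the training data and the model more closely.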

Conclusion

Generative AI is truly transforming the landscape of modern technology, and getting a grip on its terminology has become an essential skill in today’s professional world. These concepts enable teams to communicate more clearly and work with much greater accuracy.

As companies ramp up their use of AI, being fluent in this language ensures that decision-making is both informed and strategic. It gives professionals the power to assess tools, navigate challenges, and keep pace with industry developments.

By mastering this vocabulary, you’re not just keeping up with the times — you’re positioning yourself at the cutting edge of the next tech revolution. The future is in the hands of those who can understand, apply, and lead with the language of AI.

Deepak Wadhwani has over 20 years of experience in software and wireless technologies. He has worked with Fortune 500 companies including Intuit, ESRI, Qualcomm, Sprint, Verizon, Vodafone, Nortel, Microsoft and Oracle in over 60 countries. Deepak has worked on Internet marketing projects in San Diego, Los Angeles, Orange County, Denver, Nashville, Kansas City, New York, San Francisco and Huntsville. He has founded technology startups, including one of the first online city guides, an online yellow pages, and web-based enterprise solutions. He is an Internet marketing and technology expert and co-founder of a San Diego Internet marketing company.