Introduction

Artificial Intelligence is everywhere today—from the apps that finish our sentences to the cars that can drive themselves. But none of these smart systems would work without one key piece of technology: AI chips.

In today’s AI economy, chips are more than silicon: they’re strategic weapons. At stake is not just performance but billions in revenue, national competitiveness, and which companies will shape the next decade of innovation.

As AI grows more powerful, traditional computer chips can’t keep up. That’s why companies like NVIDIA, AMD, Intel, Google, and Apple are investing heavily in building chips that are faster, more efficient, and capable of training huge AI models.

AI chips are specially designed processors that handle the massive amounts of data and fast calculations AI needs to function. They’re the reason tools like ChatGPT can think quickly, why your phone recognizes your voice, and how robots make decisions in real time.

Key stats

- The AI chip market was valued at $20 billion in 2020 and is projected to exceed $300 billion by 2030.

- NVIDIA currently leads the AI GPU segment with an estimated 86% market share.

- IDC projects that more than 80% of enterprise workloads will be AI-driven by 2028.

- Among chip types, GPUs (Graphics Processing Units) hold the largest share of the AI chip market (around 46.5% in 2025).
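To make the first stat concrete, the short sketch below works out the compound annual growth rate (CAGR) implied by going from $20 billion in 2020 to $300 billion in 2030. The function name `implied_cagr` is only illustrative, and because the projection says the market will "exceed" $300 billion, the result is best read as a rough lower bound rather than a forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures taken from the stat above, in billions of US dollars.
market_2020 = 20.0
market_2030 = 300.0

cagr = implied_cagr(market_2020, market_2030, years=2030 - 2020)
print(f"Implied CAGR, 2020-2030: {cagr:.1%}")
```

In other words, the projection assumes the market grows by roughly 31% every year for a decade.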

In this article, we’ll dive into the heart of the AI chip wars—who’s winning, what’s really at stake, and why this battle will influence the future of technology, global business, and even geopolitics.

What Are AI Chips?

AI chips are specialized processors designed to handle the intense computational needs of artificial intelligence.

Unlike traditional CPUs that perform general tasks, AI chips are optimized for machine learning, deep learning, and massive parallel processing. They enable the quick training of complex models and the efficient execution of AI applications.

- CPU: The main chip in a computer. Good at many tasks but slow for AI.

- GPU: Designed for graphics, but great for AI because it handles many calculations at once.

- TPU: Google’s chip made specifically for deep-learning math.

- ASIC: A chip built for one specific AI task, extremely fast and efficient.

- FPGA: A flexible chip that can be reprogrammed for different AI needs.

Key Features of AI Chips

AI chips stand apart from traditional processors because they’re built specifically for speed, efficiency, and scalability in machine learning:

- Massive Parallelism: They process countless operations simultaneously, ideal for neural networks and deep learning.

- High Throughput: Capable of digesting huge volumes of data quickly.

- Low Latency: Essential for real-time applications like autonomous driving, robotics, and medical imaging.

- Efficient Memory Access: AI models need rapid movement of data between memory and compute cores, and these chips minimize bottlenecks.

Without these optimizations, training modern AI models would take years—or wouldn’t be possible at all. AI chips are the engines powering today’s intelligence revolution.
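Why does parallelism help so much? A minimal sketch, assuming a toy neural-network layer written as a matrix-vector product: every output value depends only on one row of weights and the shared input, so all rows can be computed at the same time. The names `matvec_sequential` and `matvec_parallel` are illustrative, and the Python thread pool here only demonstrates that the work is independent; it is not how an AI chip is actually programmed and will not run faster in practice.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Multiply-accumulate: the basic operation inside neural networks."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    """Compute one output at a time, the way a single core would."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Hand each row to a worker; no row needs another row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


# Toy weights and inputs, chosen only for illustration.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
inputs = [1, 0, -1]

assert matvec_sequential(weights, inputs) == matvec_parallel(weights, inputs)
print(matvec_parallel(weights, inputs))  # [-2, -2, -2, -2]
```

An AI chip applies the same idea in hardware, with thousands of multiply-accumulate units working on different rows at once.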

Growth of AI and Its Impact on Chip Demand

AI is everywhere today—from voice assistants to AI-generated art—and it’s driving massive demand for one thing: powerful AI chips.

- AI has become a daily necessity, not a future concept.

- McKinsey reports that adoption has more than doubled since 2017, with businesses in every industry using AI to stay competitive.

- Behind all these tools and innovations is the hardware that makes them possible: high-performance AI chips.

Leading Companies in the AI Chip War

With AI chip demand accelerating, the race to power modern artificial intelligence has expanded beyond traditional semiconductor leaders to include cloud hyperscalers and even social media firms designing custom silicon for massive AI workloads. As a result, the AI chip wars are no longer just technology competitions; they have evolved into geopolitical contests that influence national security, supply chains, and global economic power.

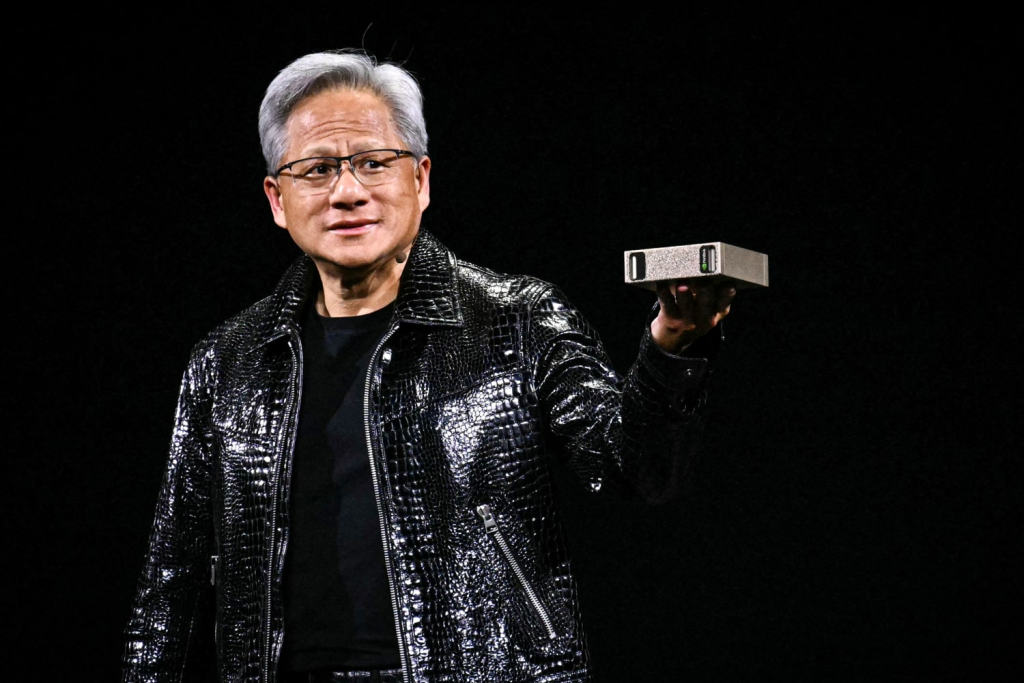

Behind every breakthrough in AI hardware lies a leadership decision—often made years before the market fully understood its importance. The AI chip wars are not only a contest between technologies or corporations; they are shaped by visionary leaders, strategic pivots, and high-stakes bets that have redefined entire industries.

NVIDIA

When Jensen Huang co-founded Nvidia, the company was largely known for powering video games. What set Huang apart was not just engineering talent, but strategic foresight. Long before artificial intelligence became a boardroom priority, Huang recognized that GPUs—originally designed for graphics—could become the backbone of accelerated computing.

- Rather than treating AI as a side opportunity, Nvidia invested deeply in software ecosystems like CUDA, built long-term relationships with researchers, and aligned its hardware roadmap with future AI workloads.

- That decision transformed Nvidia from a niche graphics company into the central infrastructure provider for the global AI economy, earning Huang recognition as one of the most influential technology leaders of the era.

For business leaders, Nvidia’s story is a lesson in seeing beyond immediate markets and committing early to platforms that create long-term competitive advantage.

NVIDIA currently leads the AI chip market, driven by its high-performance GPUs such as the A100 and H100, widely used to train and deploy advanced models like GPT-4.

- Its upcoming B100 is expected to deliver even greater speed and efficiency.

- A key advantage is the CUDA software ecosystem, which enables developers to optimize AI workloads specifically for NVIDIA hardware.

- This combination of hardware and software has resulted in rapid growth, with data center revenue exceeding $14.5 billion in the third quarter of fiscal 2024.

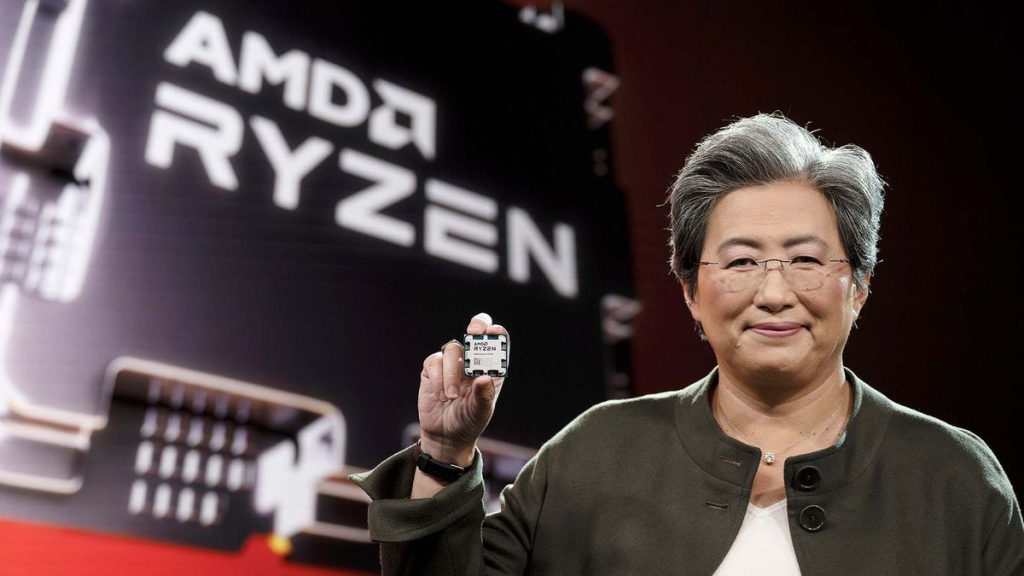

AMD

AMD has become a strong competitor, gaining significant traction with its MI300 series.

- The MI300X offers 192 GB of HBM3 memory and robust performance for both training and inference.

- AMD emphasizes open standards and partnerships with cloud providers, supported by its ROCm software stack.

- While it does not yet match NVIDIA’s market dominance, AMD’s rapid progress positions it as a serious contender.
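To put the 192 GB figure in context, here is a rough sketch of how much memory a model's weights occupy at different numeric precisions. The 70-billion-parameter model is a hypothetical example, not a figure from AMD, and the estimate covers weights only: activations, caches, and optimizer state need additional memory, so real deployments have less headroom than this suggests.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights only, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_accelerator(num_params: float, dtype: str,
                            memory_gb: float = 192.0) -> bool:
    """True if the weights alone fit in the given memory (default: 192 GB)."""
    return weights_gb(num_params, dtype) <= memory_gb


# Hypothetical model size, chosen only for illustration.
hypothetical_params = 70e9

for dtype in BYTES_PER_PARAM:
    size = weights_gb(hypothetical_params, dtype)
    verdict = "fits" if fits_on_one_accelerator(hypothetical_params, dtype) else "does not fit"
    print(f"{dtype}: {size:.0f} GB of weights, {verdict} in 192 GB")
```

Under these assumptions the weights fit on a single chip at 16-bit or 8-bit precision but not at 32-bit, which is one reason large on-chip memory matters for inference.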

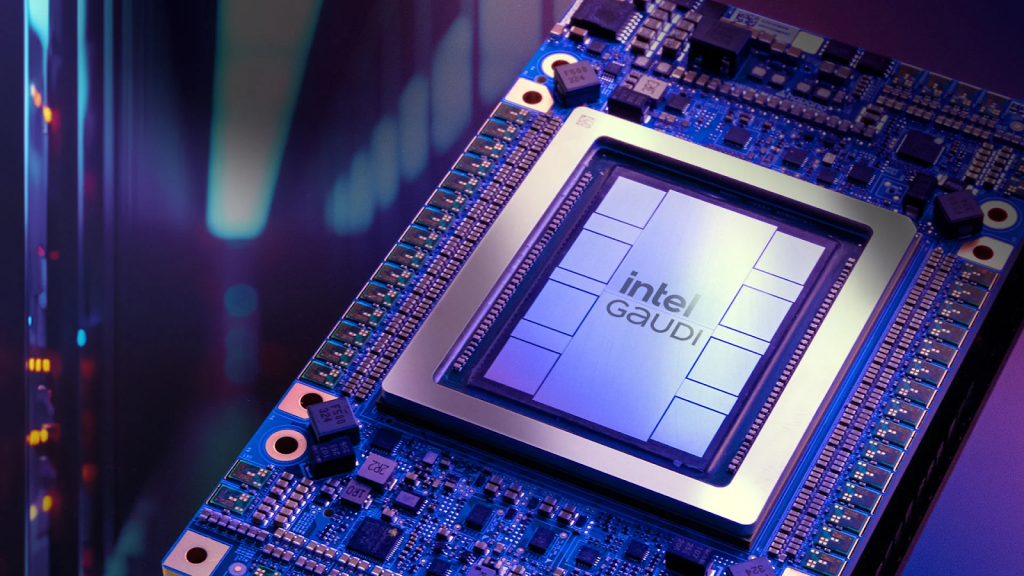

Intel

Intel is expanding into AI with its Gaudi 2 and Gaudi 3 chips, targeting data center training and inference workloads.

- Beyond designing its own chips, Intel is investing heavily in its Foundry Services to manufacture chips for other companies, including AI startups.

- The company is also exploring emerging technologies such as neuromorphic and optical computing as long-term bets in the AI space.

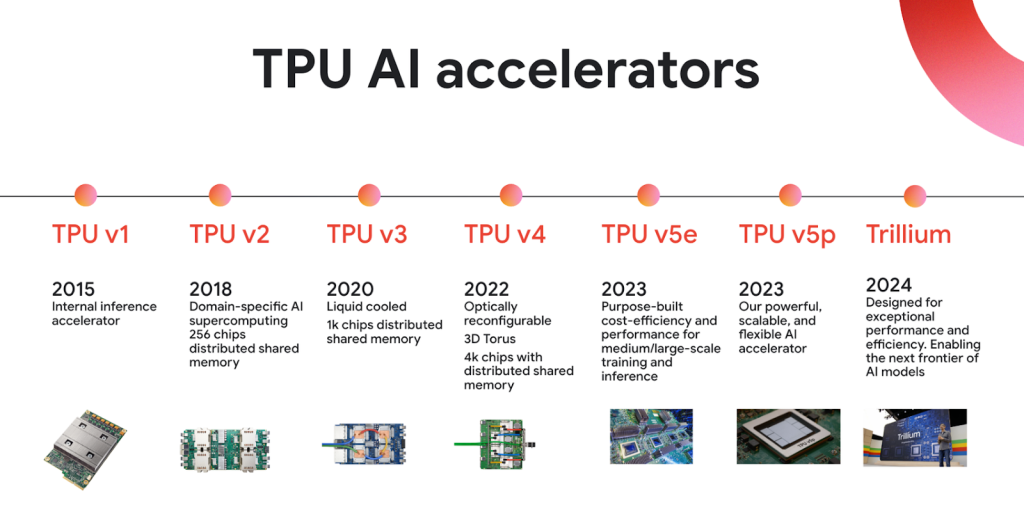

Google

Google pioneered custom AI hardware with its Tensor Processing Units (TPUs), initially developed for internal use.

- Today, TPUs power core products like Search, Translate, and various Google Cloud AI services.

- The latest TPU v5 is optimized for large-scale model training and supports Google’s most advanced AI systems, including PaLM.

- Google’s AI-first strategy and custom silicon give it a notable competitive advantage.

Apple

Apple has quietly integrated advanced AI accelerators—Neural Engines—across its product lineup, including iPhones, iPads, and Macs.

These enable fast, private, on-device AI processing. While Apple is not focused on training massive AI models, it plays a key role in advancing edge AI for consumer devices.

Microsoft

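Microsoft, traditionally reliant on NVIDIA, introduced its own custom chips in late 2023:

- Maia 100, an AI accelerator for training and inference (developed under the codename Athena)

- Cobalt 100, an Arm-based CPU for general-purpose cloud workloads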

These chips will support Microsoft Azure’s AI infrastructure and strengthen integration with OpenAI. The move reflects Microsoft’s goal of controlling more of its AI hardware stack.

Amazon (AWS)

AWS is also reducing dependence on third-party chip makers by developing its own AI processors:

- Trainium for model training

- Inferentia for inference

These chips offer improved price-performance for cloud customers and help AWS scale its AI services more efficiently.

Meta

Meta has entered the AI chip space to support its large internal workloads, including LLMs, recommendation algorithms, and metaverse applications.

Its MTIA (Meta Training and Inference Accelerator) is designed to lower reliance on external vendors and optimize performance for Meta’s open-source LLaMA models.

AI Chip Startups

The AI chip startup ecosystem is highly active, with numerous companies developing innovative solutions for diverse applications, from data centers to edge devices.

Notable AI chip startups include:

- Groq: Known for its Language Processing Unit (LPU) inference engine, which delivers ultra-fast, low-latency AI inference, making it a major player among companies that both produce chips and run their own AI cloud.

- Cerebras Systems: It has developed the Wafer-Scale Engine (WSE), the largest computer chip ever built, to accelerate deep learning models and provide unparalleled computational power for AI research.

- Tenstorrent: A Canadian startup developing high-performance AI processing chips and IP based on the RISC-V architecture for data centers and the automotive industry. The company is led by chip design veteran Jim Keller.

- SambaNova Systems: Produces its own chips and offers an AI cloud, focusing on high-performance, integrated hardware and software platforms for large-scale AI.

- Semron: A German startup developing CapRAM, an in-memory computing technology that uses 3D stacking to run generative AI models directly on mobile and edge devices with high energy efficiency.

These startups challenge giants like NVIDIA, AMD, and Intel by developing new architectures and specialized solutions—such as edge AI, optical, and neuromorphic chips—while using cloud-based design tools to move faster to market.

This evolution raises two critical questions every business leader must now confront.

Why should CFOs care about AI chips?

Because AI chip choices now drive some of the largest capital expenditures in modern enterprises—directly impacting data-center build costs, energy consumption, hardware refresh cycles, and overall total cost of ownership (TCO), often amounting to millions of dollars over the lifecycle of AI infrastructure.
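A back-of-the-envelope sketch shows how these cost drivers combine. Every number below (fleet size, chip price, power draw, electricity price, lifetime) is a hypothetical placeholder rather than vendor data, and the model deliberately ignores networking, staffing, cooling equipment, and idle time. The class name `AcceleratorFleet` is likewise only illustrative.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorFleet:
    num_chips: int
    price_per_chip_usd: float
    watts_per_chip: float
    pue: float  # power usage effectiveness: facility power / IT power
    electricity_usd_per_kwh: float
    lifetime_years: float

    def capex_usd(self) -> float:
        """Up-front hardware purchase cost."""
        return self.num_chips * self.price_per_chip_usd

    def energy_cost_usd(self) -> float:
        """Electricity cost over the lifetime, assuming chips run 24/7."""
        hours = self.lifetime_years * 365 * 24
        facility_kw = self.num_chips * self.watts_per_chip / 1000 * self.pue
        return facility_kw * hours * self.electricity_usd_per_kwh

    def tco_usd(self) -> float:
        """Simplified TCO: hardware plus energy only."""
        return self.capex_usd() + self.energy_cost_usd()


# Hypothetical inputs, for illustration only.
fleet = AcceleratorFleet(
    num_chips=1_000,
    price_per_chip_usd=25_000,
    watts_per_chip=700,
    pue=1.3,
    electricity_usd_per_kwh=0.10,
    lifetime_years=4,
)

print(f"Hardware: ${fleet.capex_usd():,.0f}")
print(f"Energy:   ${fleet.energy_cost_usd():,.0f}")
print(f"TCO:      ${fleet.tco_usd():,.0f}")
```

Even in this simplified model, changing the chip price, power draw, or refresh cycle shifts the total by millions of dollars, which is why chip selection is a finance decision as much as an engineering one.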

What do the AI chip wars mean for partnerships and outsourcing?

As cloud leaders like Microsoft and AWS build custom silicon, the shift signals a move toward vertical integration as a core strategy—reducing reliance on third-party vendors, gaining pricing leverage, and reshaping traditional outsourcing and partnership models beyond simple product development.

Energy Companies - Power Behind AI

Energy companies are increasingly critical partners in powering the AI revolution by providing the vast, consistent power required for AI data centers. Key energy providers and their AI partnerships include:

Constellation Energy

- Constellation, a major U.S. producer of carbon-free nuclear energy, signed a 20-year agreement to power Microsoft’s AI data centers.

- The deal includes energy from the revitalized Three Mile Island facility, now called the Crane Clean Energy Center.

- Microsoft is also partnering with nuclear startups such as Oklo and Helion to support its long-term AI power needs.

Bloom Energy

Bloom Energy provides on-site solid-oxide fuel cells that can run on natural gas, biogas, or hydrogen, allowing data centers to generate their own power and avoid grid delays. It has major partnerships with:

- Oracle: To power Oracle AI data centers.

- Equinix: To deploy fuel cells at multiple data centers.

- Brookfield: A $5 billion strategic partnership to power new “AI factories” globally.

Crusoe Energy

Crusoe is an “AI factory” company that builds and operates high-performance GPU clusters, utilizing otherwise flared natural gas and other environmentally aligned power sources (wind, solar, etc.) for its operations. They partner with energy companies like ExxonMobil and Equinor.

NextEra Energy

As one of the world’s largest renewable utilities, NextEra Energy is building solar, wind, and battery storage infrastructure to help hyperscalers meet their clean energy targets for their data centers.

GE Vernova

This company is dominant in power generation and infrastructure and is actively working with hyperscalers to provide the necessary turbines and grid solutions for the massive power needs of AI data centers.

Saudi Aramco

The oil giant signed an MoU with AMD to explore using AI for industrial workloads in the energy sector and is investing in data center development for AI.

Leading Applications & Infrastructure projects powered by AI Chips

Modern AI models don’t just need powerful chips; they require entire data centers built around AI infrastructure. These applications and infrastructure projects rely on massive compute clusters powered by GPUs, TPUs, and other AI accelerators.

Hyperscalers

Hyperscalers are companies that operate massive, hyperscale data centers built for large-scale cloud computing, storage, and networking.

- Examples include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

- Hyperscale data centers typically contain 5,000+ servers and vast floor space to process enormous volumes of data.

- They offer flexible cloud resources through Infrastructure as a Service (IaaS), enabling businesses to scale compute power up or down instantly.

In the AI chip race, hyperscalers are the critical backbone, providing the compute, networking, and energy capacity needed to train frontier AI models.

ChatGPT & OpenAI

ChatGPT and GPT-4 rely on thousands of NVIDIA A100 and H100 GPUs running in parallel. Training GPT-4 reportedly required:

- Tens of thousands of high-end GPUs

- Continuous operation across multiple data centers

- Massive cooling and power infrastructure

Project Stargate

OpenAI and Microsoft, along with partners including SoftBank and Oracle, are involved in planning and building a series of large-scale AI data centers and supercomputers collectively known as the Stargate project.

Stargate is expected to include:

- Millions of advanced AI chips, including future Nvidia GB200 systems

- Gigawatt-level power requirements to support large-scale AI workloads
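The link between "millions of chips" and "gigawatt-level power" is simple arithmetic, sketched below. The per-chip power draw and the facility overhead factor (PUE) are hypothetical round numbers chosen for illustration, not published Stargate or NVIDIA specifications.

```python
def facility_power_gw(num_chips: int, watts_per_chip: float, pue: float) -> float:
    """Total facility power in gigawatts.

    pue (power usage effectiveness) scales chip power up to account for
    cooling and other facility overhead.
    """
    return num_chips * watts_per_chip * pue / 1e9


# Hypothetical inputs: one million chips at 1,000 W each, PUE of 1.2.
power = facility_power_gw(num_chips=1_000_000, watts_per_chip=1_000.0, pue=1.2)
print(f"Estimated facility power: {power:.1f} GW")
```

Under these assumptions, a million chips already draw more than a gigawatt, comparable to the output of a large power plant.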

Google Gemini

Google’s Gemini models run primarily on TPU v4 and TPU v5 pods—Google’s custom AI chips optimized for deep learning. A single TPU v5 pod can contain:

- Hundreds of interconnected chips

- Custom high-bandwidth networking

- 10× faster training than earlier TPU generations

Gemini’s development shows how vertically integrated AI hardware gives companies faster iteration cycles and lower training costs.

Anthropic Claude

Anthropic trains its Claude models on large-scale clusters of NVIDIA GPUs across AWS data centers. Key elements of its infrastructure include:

- AWS Trainium and Inferentia experiments for cost reduction

- Distributed training across thousands of GPUs

- Safety-focused simulations that require extensive compute

Claude’s compute needs helped drive Amazon’s $4B investment in Anthropic (2023–2024), demonstrating how AI models influence cloud hardware development.

Perplexity AI

Perplexity uses a mix of:

- High-performance NVIDIA GPUs

- Custom retrieval systems requiring large memory bandwidth

- Distributed inference nodes for fast response times

Unlike pure LLMs, Perplexity integrates search + AI, which makes real-time inference performance critical. This places unique demands on GPU memory, latency, and networking.

Grok (xAI)

Elon Musk’s xAI trains Grok on a dedicated cluster of NVIDIA H100 GPUs, with plans to expand to the next-generation Blackwell B100 chips. xAI aims to build:

- One of the world’s largest GPU clusters (100,000+ units)

- A training pipeline optimized for rapid model iteration

- Supercomputing performance comparable to top research labs

Grok’s infrastructure highlights the arms race for larger, faster GPU farms.

Humane AI Pin: Edge + Cloud Hybrid AI

Humane’s AI Pin depends on edge processors for on-device inference and cloud GPUs for complex tasks. Its model shows emerging trends:

- Smaller, efficient edge AI accelerators

- Offloading heavy computation to cloud GPU clusters

- Bridging real-time AI with wearable devices

This hybrid approach represents the future of consumer AI hardware.

Meta LLaMA

Meta trains LLaMA models on huge GPU clusters and is now developing MTIA (Meta Training & Inference Accelerator) to reduce reliance on NVIDIA. Meta’s infrastructure includes:

- GPU clusters with tens of thousands of units

- Custom networking for large-scale parallelism

- Proprietary AI accelerators for inference

Meta demonstrates how tech giants use AI chips both to scale models and control internal costs.

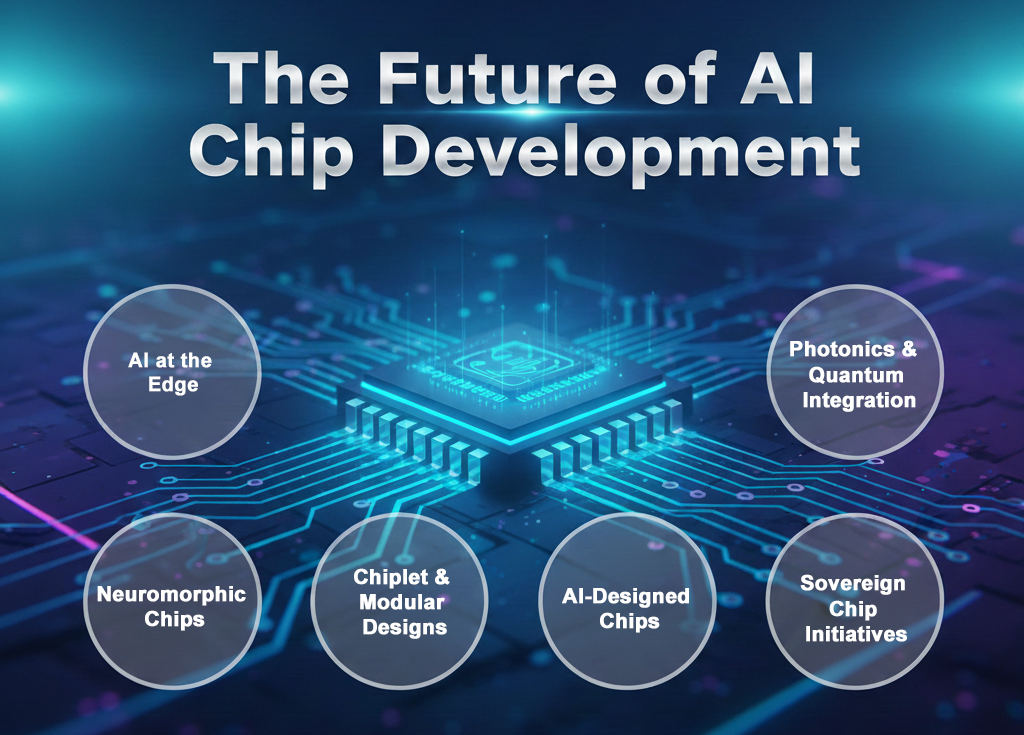

The Future of AI Chip Development

- AI at the Edge: More AI processing on devices (phones, cars, IoT) for lower latency, better privacy, and reduced cloud reliance.

- Neuromorphic Chips: Brain-inspired hardware for ultra-low power, real-time learning, and adaptive behavior.

- Chiplet & Modular Designs: Mix-and-match chip components for better yields, flexibility, and customization.

- AI-Designed Chips: AI is used to optimize chip layouts and architectures, speeding up development and improving efficiency.

- Sovereign Chip Initiatives: Countries investing in local manufacturing to reduce dependence on foreign fabs.

- Photonics & Quantum Integration: Early but promising technologies using light or quantum effects to accelerate specific AI workloads.

AI chips are the engines of the intelligence era, powering everything from large language models to automation, cybersecurity, and smart devices. Demand for faster, more efficient chips will only intensify.

In the next five years, a handful of decisions—strategic alliances, manufacturing investments, and chip designs—will determine which companies lead the intelligence economy. The winners won’t just have faster silicon; they’ll have better business models, ecosystems, and strategic foresight.

Deepak Wadhwani has over 20 years of experience in software and wireless technologies. He has worked with Fortune 500 companies including Intuit, ESRI, Qualcomm, Sprint, Verizon, Vodafone, Nortel, Microsoft, and Oracle in over 60 countries. Deepak has worked on internet marketing projects in San Diego, Los Angeles, Orange County, Denver, Nashville, Kansas City, New York, San Francisco, and Huntsville. He has founded technology startups, including one of the first online city guides, online yellow pages, and web-based enterprise solutions. He is an internet marketing and technology expert and co-founder of a San Diego internet marketing company.