Definition of A/B Testing

A/B Testing, often referred to as split testing, is a method of controlled experimentation that compares two or more variations of a digital asset to see which one performs better. Users are randomly split into groups, and each group sees a different version of a webpage, advertisement, email, or app feature.

Performance is measured with metrics chosen in advance, such as click-through rate, conversion rate, or engagement level.
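As a minimal sketch, these metrics reduce to simple ratios. The counts and the `rate` helper below are hypothetical, and conversion rate is assumed here to mean conversions per visitor; some teams define it per click instead.

```python
def rate(events: int, exposures: int) -> float:
    """Return events / exposures, or 0.0 when there were no exposures."""
    return events / exposures if exposures else 0.0


# Hypothetical counts for a single variation (illustrative numbers only).
visitors = 2000
clicks = 150
conversions = 30

click_through_rate = rate(clicks, visitors)    # share of visitors who clicked
conversion_rate = rate(conversions, visitors)  # share of visitors who converted

print(f"CTR: {click_through_rate:.1%}, conversion rate: {conversion_rate:.1%}")
```

Computing the same ratios for each variation gives the numbers that the test then compares.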

The main aim of A/B Testing is to make informed, data-driven decisions by pinpointing which variation yields the best results. Instead of relying on gut feelings or assumptions, businesses turn to A/B Testing to validate their changes based on actual user behavior.

Purpose of A/B Testing

The purpose of A/B Testing is to improve performance and reduce guesswork in decision-making. By trying variations on a subset of users before rolling them out widely, organizations limit the risk of shipping a change that hurts results.

Here are some common goals of A/B Testing:

- Increasing conversion rates

- Improving user engagement

- Reducing bounce rates

- Enhancing user experience

With a properly run A/B test, the changes you make are backed by observed user behavior rather than hunches.

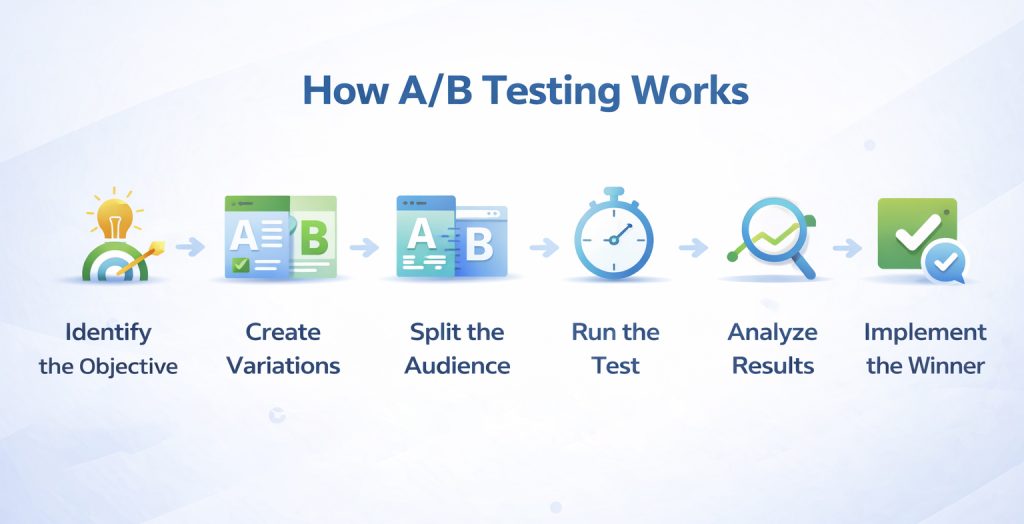

How A/B Testing Works

A/B Testing follows a structured process so that the results are accurate and reliable:

- Identify the Objective: Start by setting a specific, measurable goal, like increasing sign-ups or ad clicks.

- Create Variations: Develop two versions: the control (Version A) and the variation (Version B). Change just one element, so that any difference in results can be attributed to that change.

- Split the Audience: Randomly divide users so that each group experiences a different version under similar conditions.

- Run the Test: Run the test for a period decided in advance, long enough to gather sufficient data for a reliable comparison.

- Analyze Results: Compare the performance metrics to see which version did better, and check that the difference is statistically significant rather than due to chance.

- Implement the Winner: Roll out the higher-performing version across the platform.
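The splitting and analysis steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed implementation: the function names, the experiment name `signup_button`, and the conversion counts are all hypothetical. Hashing the user ID is one common way to get a stable, roughly 50/50 split, and a two-proportion z-test is one standard way to compare conversion rates.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "signup_button") -> str:
    """Deterministically map a user to 'A' or 'B' (roughly a 50/50 split).

    Hashing experiment + user ID means the same user always sees the
    same version, without storing the assignment anywhere.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 5000 users converted on A, 250 of 5000 on B.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
significant = p_value < 0.05  # 0.05 is a conventional threshold, not a rule
print(f"user-42 sees version {assign_variant('user-42')}")
print(f"z = {z:.2f}, p = {p_value:.4f}, significant: {significant}")
```

With these made-up counts the difference clears the conventional 5% threshold, so Version B would be the candidate to roll out. In a real test, the threshold and the sample size should be fixed before the test starts.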

Types of A/B Testing

- Website A/B Testing: Experiments with elements like headlines, call-to-action buttons, layouts, images, or overall page designs to boost user engagement and conversions.

- Email A/B Testing: Varies subject lines, content, visuals, send times, and call-to-action placement to improve open and click-through rates.

- Ad A/B Testing: Commonly used on platforms like Google Ads or social media, this involves testing different versions of ad copy, visuals, targeting strategies, or landing pages.

- Product A/B Testing: Product teams rely on A/B Testing to assess changes in features, user interface tweaks, or onboarding processes within their applications.

Key Elements Commonly Tested

A/B Testing can be applied to a wide range of digital elements, such as:

- Headlines and subheadings

- Call-to-action buttons

- Page layouts and navigation

- Images and videos

- Pricing displays

- Form length and structure

- Email subject lines

- Ad creatives and messaging

The key to effective testing is to change one variable at a time, so that any difference in performance can be attributed to that variable.

Advantages of A/B Testing

A/B Testing offers several benefits for businesses and marketers:

- Data-Driven Decisions: Choices rest on measured results rather than guesswork.

- Improved Performance: You can identify which variations actually perform better.

- Lower Risk: You test changes on part of your audience before rolling them out completely.

- Enhanced User Experience: Designs are validated against how users actually behave.

- Cost Efficiency: Marketing and development budgets go toward changes that have been shown to work.

These benefits make A/B Testing a core part of a digital optimization strategy.

A/B Testing vs Multivariate Testing

A/B Testing compares two or more versions that differ in a single variable. Multivariate testing, by contrast, changes several variables at once and evaluates their combinations.

A/B Testing is straightforward, needs less traffic, and is well suited to targeted optimization. Multivariate testing is more complex and needs considerably more traffic, because every combination of variables must receive enough users to be evaluated.
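To make the traffic difference concrete, here is a rough sketch. It assumes a common normal-approximation formula for the sample size needed to compare two conversion rates, and it treats every multivariate combination as its own group of that size, which is a simplification. The baseline rate, the lift, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, lift_abs: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users per group needed to detect an absolute lift."""
    p1 = baseline
    p2 = baseline + lift_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def multivariate_cells(options_per_variable: list[int]) -> int:
    """Number of combinations when every option is crossed with every other."""
    return math.prod(options_per_variable)


# Hypothetical scenario: 4% baseline conversion, looking for a 1-point lift.
per_group = sample_size_per_group(baseline=0.04, lift_abs=0.01)

ab_total = 2 * per_group                # control + one variation
cells = multivariate_cells([2, 3, 2])   # e.g. 2 headlines x 3 images x 2 buttons
mvt_total = cells * per_group           # simplification: one full group per cell

print(f"per group: {per_group}, A/B total: {ab_total}, "
      f"multivariate total ({cells} combinations): {mvt_total}")
```

Even with only three variables, the number of combinations grows multiplicatively, which is why multivariate tests are usually reserved for high-traffic pages.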